TV stars and terrorists may seem to have little in common. But after watching YouTube videos by members of a violent terrorist organization, Yotam Ophir realized that the two groups used similar tactics to connect with distant audiences. The terrorists dressed casually, looked into the camera when they spoke, and recounted their pasts in a fascinating, plot-driven manner, just like the actors.

When Ophir presented that theory in class as a junior at the University of Haifa in Israel, his professor, communication scholar Gabriel Weimann, was so impressed that he encouraged Ophir to publish the idea. The result was Ophir’s first academic paper, published in March 2012 in Perspectives on Terrorism.

“I think [that paper] opened the door to him, both outside and inside him, inside his mind,” says Weimann, now at Reichman University in Herzliya, Israel.

Since then, Ophir has remained intrigued by how different people—whether terrorists, policymakers, journalists, or public health officials—communicate information and beliefs to wider audiences. The past 20 years have dramatically changed the way we interact with media, says Ophir, now a communications researcher at the University at Buffalo in New York. “All my research is about people’s efforts to cope with the insane and ever-increasing amount of information that now surrounds us 24/7.”

Ophir is particularly interested in understanding how disinformation, the subject of a book he is writing, seeps into fields such as health, science, and politics. “I hope our work can help [people] understand … what stands between people and the acceptance of … evidence,” says Ophir.

How the media covers epidemics

Ophir did not set out to become a communication researcher. “I wanted to be a musician,” he says.

But an introductory mass communication class during his freshman year, also taught by Weimann, set Ophir on a new trajectory. On the first day of class, Weimann told the story of Jessica Lynch, a wounded American soldier who had been captured by Iraqi forces in 2003. Weimann showed the class the seemingly dramatic video of Lynch’s rescue. That video and the media frenzy surrounding its release had turned Lynch into a war hero.

But the portrayal was misleading. Lynch had not been shot or stabbed, as initially reported. And Iraqi soldiers had already abandoned the hospital where Lynch was being held by the time the US military arrived. Journalists, who had not witnessed the “rescue,” relied heavily on a five-minute video clip released by the Pentagon. A damning BBC investigation later called the events “one of the most astonishing pieces of news management ever conceived.”

Ophir was taken aback by how the entire operation had been staged to look like a “Hollywood movie,” and by the media spin that followed. “It touched a nerve and I was like, ‘Wow, I need to know more about this,’” he says.

Ophir went on to earn a master’s degree at the University of Haifa, studying how fictional characters can influence people’s beliefs. In 2013, he moved to the University of Pennsylvania to pursue a PhD in the lab of communication researcher Joseph Cappella, whose work focused on the tobacco industry. Ophir first investigated how cigarette companies entice people to buy products known to cause cancer and other health problems.

But his focus changed in 2014, when an Ebola outbreak began sweeping through West Africa. Ophir devoured news about US medical workers who had contracted the disease abroad and brought it home. “It scared me personally,” he says.

Soon, however, Ophir noticed a disconnect between the science of how Ebola spreads and how the disease was being portrayed in the media. For example, many stories focused on the subway rides of an infected doctor who had returned to New York City. But Ebola spreads through direct contact with bodily fluids, which is unlikely to happen on a subway, so those stories mostly served to stoke fear, Ophir says. Curious to know more, Ophir shifted his focus. “I wanted to study the way the media talks about epidemics,” he says.

One of Ophir’s early challenges was figuring out how to identify patterns in reams of documents, Cappella recalls. “He took advantage of the computational techniques that were being developed and helped develop them himself.”

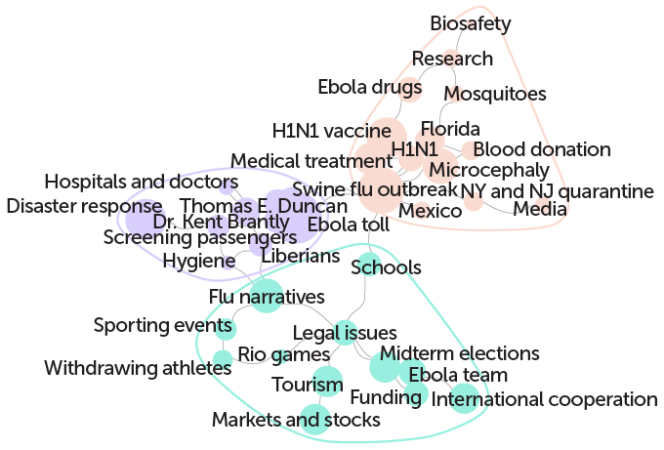

For example, Ophir automated his analysis of more than 5,000 articles about the H1N1, Ebola, and Zika epidemics published in four major newspapers: the New York Times, the Washington Post, USA Today, and the Wall Street Journal. Those articles were often at odds with the US Centers for Disease Control and Prevention’s recommendations for communicating about infectious disease outbreaks, Ophir reported in the May/June 2018 issue of Health Security. Few articles included practical information about what individuals could do to reduce their risk of catching and spreading the disease.

Ophir’s research convinced him that the United States was ill-prepared for an outbreak of infectious disease. “I was warning that we’re not ready for the next epidemic because we don’t know how to talk about it,” says Ophir. “Then COVID happened.”

Turning to science and the public

In recent years, Ophir and members of his lab have looked at how political polarization plays out in nonpolitical spaces, such as app review sites. They are also starting to identify fringe ideas and beliefs on extremist websites before those ideas go mainstream. All of this work is related, says Cappella, in that it “describes the movement of information, and the movement of persuasive information, through society.”

Ophir’s latest research is a case in point. Surveys commonly ask whether or not people trust science, but Ophir wanted a more nuanced picture of people’s beliefs. In 2022, working with researchers from the University of Pennsylvania’s Annenberg Public Policy Center, he developed a survey to measure public perceptions of science and scientists. The team asked more than 1,100 respondents by phone about those perceptions, their political leanings, and their funding preferences. Ideology is linked to funding preferences, the team reported in September 2023 in the Proceedings of the National Academy of Sciences. For example, conservatives who perceived scientists as biased were less likely to support science funding. The same was not true of liberals.

That work produced a predictive model that can estimate the gap between how science presents itself and how the public perceives that presentation. Identifying such communication gaps is a key step in meeting today’s challenges, says Ophir. “We could come up with a solution to climate change tomorrow and half the country would reject it… We won’t be able to survive if we don’t learn to communicate better.”

Image source: www.sciencenews.org